Debugging Machine Learning Models: Effective Tools and Techniques

Sanam Malhotra | 6th May 2020

“To err is human; to edit, divine.” Machine learning models are no different from us in this respect: they make errors that have to be caught and corrected. The entire development cycle of AI solutions demands deep knowledge, precision, preemptive action, and corrective measures to complete the automation journey. Even so, models can still deliver erroneous, socially discriminatory, or unethical outputs. As an experienced provider of machine learning development services, Oodles AI shares some effective techniques for debugging machine learning models.

Effective Techniques for Debugging Machine Learning Models

1) Going Basic With Model Assertions

Making accurate predictions is one of the most common use cases of machine learning. From predicting the age of potential customers to anticipating the spread of disease, predictive analytics is widely used to build forward-looking solutions. However, data scientists and developers often struggle to train models that produce accurate, reliable, and effective predictions.

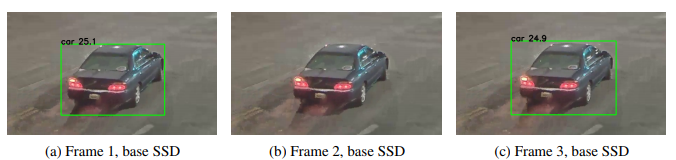

Examples of “flickering” and “overlapping” objects in video object detection. Such errors can seriously hinder accurate traffic mapping and autonomous driving, explain researchers at Stanford University.


Assertions are one of the most classic ways of ensuring software quality, and model assertions now bring them to machine learning to debug models and improve their performance. They are post-prediction business rules, written as functions over model outputs, that flag predictions which are likely to be wrong so they can be corrected.
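To make the idea concrete, here is a minimal sketch of two assertions written as plain functions over a video detector's outputs, mirroring the flickering and overlapping errors in the figure caption. It assumes each frame's detections arrive as a list of `(x1, y1, x2, y2)` boxes; the function names `flicker_assertion` and `overlap_assertion` and the IoU thresholds are illustrative choices, not an API from the Stanford research.

```python
from typing import List, Tuple

# A bounding box as (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def has_match(box: Box, detections: List[Box], threshold: float) -> bool:
    """True if any detection overlaps `box` by at least `threshold` IoU."""
    return any(iou(box, other) >= threshold for other in detections)


def flicker_assertion(frames: List[List[Box]], threshold: float = 0.5) -> List[int]:
    """Flag frame t if an object detected in frames t-1 and t+1 is missing in t."""
    flagged = []
    for t in range(1, len(frames) - 1):
        for box in frames[t - 1]:
            if has_match(box, frames[t + 1], threshold) and not has_match(box, frames[t], threshold):
                flagged.append(t)
                break  # one flickering object is enough to flag the frame
    return flagged


def overlap_assertion(frames: List[List[Box]], threshold: float = 0.7) -> List[int]:
    """Flag frames where two detections overlap so much they likely duplicate one object."""
    flagged = []
    for t, boxes in enumerate(frames):
        pairs = ((i, j) for i in range(len(boxes)) for j in range(i + 1, len(boxes)))
        if any(iou(boxes[i], boxes[j]) >= threshold for i, j in pairs):
            flagged.append(t)
    return flagged


# Usage: a car is detected in frames 0 and 2 but dropped in frame 1.
frames = [[(0, 0, 10, 10)], [], [(0, 0, 10, 10)]]
print(flicker_assertion(frames))  # [1]
```

Neither assertion changes the model; each only returns the frame indices where its rule is violated, which is what makes assertions cheap to add after a model is already deployed.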

Model assertions are an effective debugging strategy that enables providers of artificial intelligence services to:

a) Identify large numbers of errors effectively

b) Improve model performance by significantly reducing the number and frequency of errors

c) Reduce labeling costs by automatically generating weak labels where assertions fail.
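The benefits above come from running many assertions over a batch of outputs and acting only on the ones that fire. The sketch below assumes each assertion is a function that returns `True` when an output looks wrong, and uses hypothetical checks for a customer-age model. The helper `triage` ranks flagged outputs by how many assertions they fail and returns a fixed labeling budget; this ranking is a deliberately simple heuristic of our own, not the selection strategy used in the original research.

```python
from typing import Callable, Dict, List

# An assertion inspects one model output and returns True when it looks wrong.
Assertion = Callable[[dict], bool]


def triage(outputs: List[dict], assertions: Dict[str, Assertion], budget: int) -> List[int]:
    """Return indices of up to `budget` outputs to send for human review,
    ordered by how many assertions each one fails (most first)."""
    scored = []
    for i, out in enumerate(outputs):
        failed = sum(1 for check in assertions.values() if check(out))
        if failed:
            scored.append((failed, i))
    scored.sort(key=lambda s: (-s[0], s[1]))  # most failures first, then input order
    return [i for _, i in scored[:budget]]


# Hypothetical assertions for a customer-age prediction model.
assertions = {
    "negative_age": lambda o: o["age"] < 0,
    "implausible_age": lambda o: o["age"] > 120,
    "low_confidence": lambda o: o["confidence"] < 0.5,
}
outputs = [
    {"age": 34, "confidence": 0.9},
    {"age": -3, "confidence": 0.4},
    {"age": 150, "confidence": 0.8},
    {"age": 25, "confidence": 0.95},
]
print(triage(outputs, assertions, budget=2))  # [1, 2]
```

Only the two suspicious predictions reach a human, so labeling effort is spent where the model is most likely to be wrong.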

For debugging domain-specific machine learning models, there are several ways of applying model assertions, such as:

(i) Runtime monitoring

Used to identify and log incorrect behavior while the model is running, helping to prevent accidents.

(ii) Corrective action

Used to return control to a human operator when an assertion fails during runtime monitoring.

(iii) Active learning

Used to detect difficult inputs that the model struggles to label correctly, so they can be prioritized for human labeling.

(iv) Weak supervision

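Used to generate weak labels automatically. An API for consistency assertions checks whether the attributes predicted for the same identifier (for example, the same tracked object across video frames) stay consistent; inconsistent outputs can then be corrected into weak labels.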
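A consistency assertion for weak supervision can be sketched in a few lines. This version assumes outputs are dictionaries that carry an identifier key and an attribute key, and it corrects violations with a majority vote; the function `consistency_weak_labels`, the field names, and the majority-vote correction rule are all illustrative assumptions rather than the API described by the Stanford researchers.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def consistency_weak_labels(
    records: List[dict], id_key: str, attr_key: str
) -> Tuple[List[int], Dict[int, str]]:
    """Consistency assertion: all records sharing an identifier should share an attribute.

    Returns the indices that violate it and a weak label (the majority value of
    the attribute for that identifier) for each violating record.
    """
    groups: Dict[object, List[int]] = defaultdict(list)
    for i, rec in enumerate(records):
        groups[rec[id_key]].append(i)

    flagged: List[int] = []
    weak_labels: Dict[int, str] = {}
    for indices in groups.values():
        counts = Counter(records[i][attr_key] for i in indices)
        if len(counts) > 1:  # assertion fails: conflicting attribute values
            majority, _ = counts.most_common(1)[0]  # ties go to the first value seen
            for i in indices:
                if records[i][attr_key] != majority:
                    flagged.append(i)
                    weak_labels[i] = majority
    return sorted(flagged), weak_labels


# Usage: track 7 is classified as a truck in one frame and a car in the others.
detections = [
    {"track_id": 7, "label": "car"},
    {"track_id": 7, "label": "car"},
    {"track_id": 7, "label": "truck"},
    {"track_id": 9, "label": "person"},
    {"track_id": 7, "label": "car"},
]
print(consistency_weak_labels(detections, "track_id", "label"))  # ([2], {2: 'car'})
```

The weak label for record 2 costs no human effort, which is how consistency assertions reduce labeling costs; a majority vote is only one possible correction rule and can be wrong when the model is consistently mistaken.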

Use cases of model assertions range from analyzing news content and running autonomous vehicles to performing video analytics and classifying visual data.


2) Debugging Deep Learning Models Using Flip Points

The complicated structure of deep learning models leaves them prone to inaccurate predictions. While Deep Neural Networks (DNNs) are highly capable solutions for multidimensional problems, their black-box nature makes them hard to interpret and discourages developers from using these multi-layered models.

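Flip points reveal the smallest changes to the input data that alter a model's predictions and classifications, which can also expose social biases. A flip point is a point on the model's decision boundary, where the output is split evenly between two classes; the flip point closest to a given input shows how little that input must change for the prediction to flip. Flip points therefore let researchers study the decision boundaries of deep learning models and classifiers.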
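For a DNN, finding the closest flip point means solving a numerical optimization problem. For a binary logistic-regression model, however, the answer has a closed form, which makes it a convenient stand-in for illustrating the concept: the output is 0.5 exactly where `w·x + b = 0`, so the closest flip point is the orthogonal projection of `x` onto that hyperplane. The sketch below assumes such a linear model; the weights and the input are made-up values.

```python
import math
from typing import List, Tuple


def logit(w: List[float], b: float, x: List[float]) -> float:
    """Linear score w·x + b of a logistic-regression model."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Probability of the positive class, sigmoid(w·x + b)."""
    return 1.0 / (1.0 + math.exp(-logit(w, b, x)))


def closest_flip_point(w: List[float], b: float, x: List[float]) -> Tuple[List[float], float]:
    """Project x onto the decision boundary w·x + b = 0, where the output is 0.5.

    Returns the flip point and its Euclidean distance from x.
    """
    z = logit(w, b, x)
    norm_sq = sum(wi * wi for wi in w)
    flip = [xi - z * wi / norm_sq for xi, wi in zip(x, w)]
    return flip, abs(z) / math.sqrt(norm_sq)


w, b = [2.0, -1.0], -1.0
x = [3.0, 1.0]
flip, distance = closest_flip_point(w, b, x)
print(flip, distance)             # approximately [1.4, 1.8] and 1.789
print(predict_proba(w, b, flip))  # approximately 0.5
```

The distance is a direct measure of how robust this prediction is: a small distance means a small perturbation of the input would flip the model's decision.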

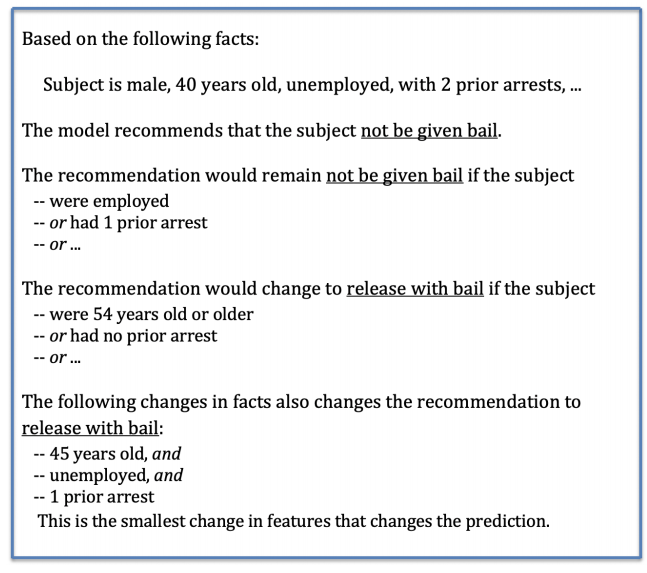

For example, for a model that recommends sentences for defendants, flip points can help debug the model by asking:

a) What is the smallest change in a single feature that changes the model output?

b) What is the smallest change in a set of features that changes the model output?
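Both questions can be posed as a restricted flip-point search: hold every other feature fixed and find the smallest change to the chosen features that reaches the decision boundary. The sketch below again assumes a linear model `w·x + b` as a closed-form stand-in for a deep network, where the same questions would instead be answered by numerical optimization; `min_change_in_features` and the example values are hypothetical.

```python
from typing import List, Optional, Sequence


def min_change_in_features(
    w: Sequence[float], b: float, x: Sequence[float], features: Sequence[int]
) -> Optional[List[float]]:
    """Smallest (Euclidean) change to the chosen features that moves x onto the
    decision boundary w·x + b = 0 of a linear model; other features stay fixed.

    Returns the change vector, or None if the chosen features all have zero weight.
    """
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    norm_sq = sum(w[j] ** 2 for j in features)
    if norm_sq == 0:
        return None  # these features cannot move the score at all
    delta = [0.0] * len(x)
    for j in features:
        delta[j] = -z * w[j] / norm_sq
    return delta


w, b, x = [2.0, -1.0, 0.5], -1.0, [3.0, 1.0, 2.0]

# (a) Smallest change in a single feature: try each feature on its own.
single = {j: min_change_in_features(w, b, x, [j])[j] for j in range(len(x))}
print(single)  # {0: -2.5, 1: 5.0, 2: -10.0}

# (b) Smallest joint change in features 0 and 1.
print(min_change_in_features(w, b, x, [0, 1]))  # [-2.0, 1.0, 0.0]
```

Here feature 0 is the most sensitive single feature, and spreading the change over features 0 and 1 reaches the boundary with a smaller overall change than any single feature can. Each result lands exactly on the boundary, so an actual flip needs a step slightly beyond it.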

Researchers at Yale University illustrate the information that can be derived by computing flip points.

Flip points can identify flaws in a model and help correct them by generating synthetic training data. They help us debug a machine learning model by:

a) Interpreting the output of a trained model

b) Diagnosing the behavior of trained models, and

c) Using synthetic data to alter the model's decision making
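One simple way to turn a flip point into synthetic training data is to place points along the line from an input through its flip point, on both sides of the boundary, and label them with a corrected rule. The sketch below assumes the flip point has already been computed and that a domain expert supplies the corrected labeling rule; `synthetic_points_across_boundary`, `expert_label`, and all values are hypothetical, and this is an illustration of the idea rather than the Yale researchers' exact procedure.

```python
from typing import Callable, List, Sequence, Tuple


def synthetic_points_across_boundary(
    x: Sequence[float],
    flip: Sequence[float],
    label_fn: Callable[[List[float]], int],
    steps: Sequence[float] = (0.9, 1.1, 1.3),
) -> List[Tuple[List[float], int]]:
    """Generate labeled synthetic points along the line from x through its flip point.

    A step t places a point at x + t * (flip - x): t < 1 stays on x's side of the
    boundary, t = 1 is the flip point, and t > 1 crosses to the other side.
    """
    samples = []
    for t in steps:
        point = [xi + t * (fi - xi) for xi, fi in zip(x, flip)]
        samples.append((point, label_fn(point)))
    return samples


# Hypothetical corrected rule from a domain expert: positive only if feature 0 >= 1.0.
def expert_label(point: List[float]) -> int:
    return 1 if point[0] >= 1.0 else 0


x, flip = [3.0, 1.0], [1.4, 1.8]
for point, label in synthetic_points_across_boundary(x, flip, expert_label):
    print([round(v, 2) for v in point], label)
```

In this example the expert rule keeps the point just past the model's flip point in the positive class, so adding these samples to the training set and retraining would push the decision boundary toward where the expert says it belongs. Retraining itself is omitted from the sketch.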

Flip points can expose flaws and, through synthetic training data, help reshape the model. They give insight into how and why the model makes certain predictions, helping developers understand its complexity and errors.


Debugging Machine Learning Models With Oodles AI

Building AI solutions requires precision, alertness, and appropriate corrective actions. At Oodles, we ensure that real-world applications of machine learning and deep learning generate value effectively.

While model development is a highly complex process, our team uses preventive measures to maintain the quality, effectiveness, and performance of the models.

Our strategies for debugging machine learning models include model assertions, flip points, and visualization tools such as ConX and TensorBoard. We have hands-on experience building industry-specific AI solutions, including:

b) Employee Attendance with Deep Video Analytics

c) Data extraction from identity cards using AI-OCR systems, and many more.

To learn more about our artificial intelligence services, connect with our AI development team.