AI-driven Video Frame Interpolation: Automating Animation and Videos

Sanam Malhotra | 26th May 2020

One of the handful of businesses gaining steam during the COVID-19 lockdown is online video streaming. From web series and Internet TV to online learning and marketing, video content dominates the digital landscape and captures the lion's share of user attention. However, the high-quality, immersive visual experience that is imperative for business profitability is painstakingly difficult to produce, and artificial intelligence (AI) is now easing that burden. This article highlights one such AI-driven technique: video frame interpolation using neural networks.

Simply put, video frame interpolation synthesizes new frames between the original ones, raising the video's frame rate (its temporal resolution) so that motion looks smoother. To expand the scope of its AI development services, Oodles AI explores how deep learning-powered video optimization can improve customer experience across business channels.
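To make the idea concrete, the simplest possible way to produce an in-between frame is to cross-fade the two neighboring frames pixel by pixel. The NumPy sketch below is that naive baseline, not DAIN, and the function name `blend_frames` is our own. It illustrates why learned methods are needed: blending ignores motion, so a moving object shows up as two faded copies instead of one object halfway along its path.

```python
import numpy as np


def blend_frames(frame0: np.ndarray, frame1: np.ndarray, t: float) -> np.ndarray:
    """Naive in-between frame: a per-pixel linear cross-fade at time t in [0, 1].

    Hypothetical baseline for illustration only. It ignores motion, so a
    moving object appears as two semi-transparent "ghosts".
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    # Work in float to avoid uint8 overflow/truncation while mixing.
    return (1.0 - t) * frame0.astype(np.float32) + t * frame1.astype(np.float32)


# A bright pixel moves from column 0 to column 2 in a 1x3 grayscale "video".
f0 = np.array([[255, 0, 0]], dtype=np.uint8)
f1 = np.array([[0, 0, 255]], dtype=np.uint8)
# Two half-bright ghosts at columns 0 and 2, nothing at column 1 where the
# object should actually be at t = 0.5.
print(blend_frames(f0, f1, 0.5))
```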

The Science Behind AI-driven Video Frame Interpolation

Deep learning offers several methods for synthesizing video frames, such as Adaptive Separable Convolution and Deep Voxel Flow. This article focuses on Depth-aware Video Frame Interpolation (DAIN), which uses the depth information in a video to explicitly detect occlusion, i.e. regions where one object passes in front of another. The technique was first published by Wenbo Bao and co-authors from Shanghai Jiao Tong University, UC Merced, and Google (CVPR 2019), who propose

“A depth-aware flow projection layer that encourages sampling of closer objects than farther ones.”

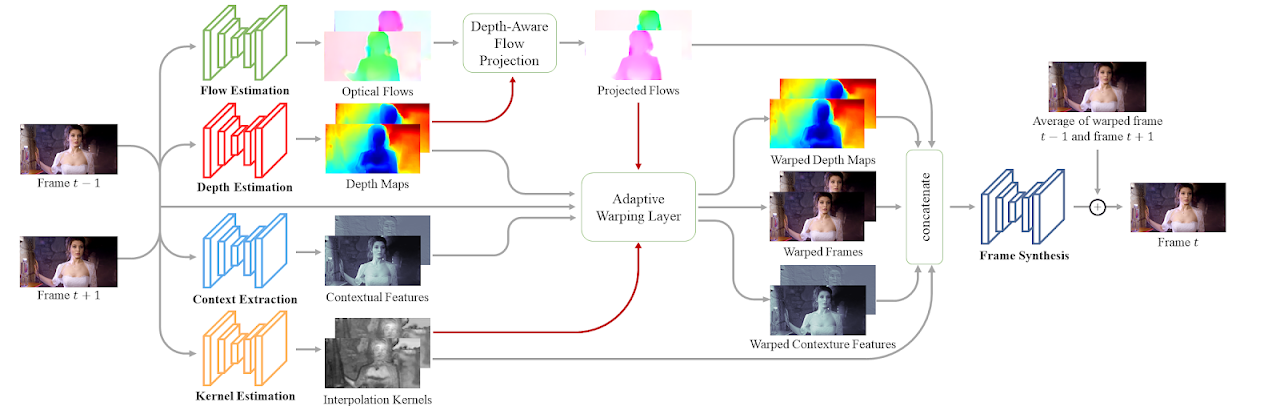
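The depth-aware projection can be sketched in NumPy as follows. As we understand the paper's formulation, each pixel of frame 0 is pushed along `t * flow01` toward the intermediate frame, and when several pixels land on the same location, their flows are averaged with weights `1 / depth`, so closer (smaller-depth) pixels dominate; the projected flow back to frame 0 is `-t` times that weighted average. The function name is hypothetical, and the sketch simplifies things: landing positions are rounded to the nearest pixel, and locations nothing lands on ("holes") are left at zero, whereas DAIN fills them.

```python
import numpy as np


def depth_aware_flow_projection(flow01, depth0, t):
    """Project flow F(0->1) to F(t->0), weighting colliding pixels by 1/depth.

    flow01: (H, W, 2) array of (dx, dy) displacements from frame 0 to frame 1.
    depth0: (H, W) array of positive depths for frame 0.
    t:      time of the intermediate frame, in (0, 1).
    Returns (flow_t0, hit); pixels that no flow vector lands on stay zero.
    """
    h, w, _ = flow01.shape
    num = np.zeros((h, w, 2))
    den = np.zeros((h, w))
    ys, xs = np.mgrid[0:h, 0:w]
    # Where each frame-0 pixel lands at time t, rounded to the pixel grid.
    tx = np.rint(xs + t * flow01[..., 0]).astype(int)
    ty = np.rint(ys + t * flow01[..., 1]).astype(int)
    inside = (tx >= 0) & (tx < w) & (ty >= 0) & (ty < h)
    weight = 1.0 / depth0  # closer pixels (smaller depth) get larger weights
    # np.add.at accumulates correctly when several pixels hit the same target.
    np.add.at(num, (ty[inside], tx[inside]), weight[inside][:, None] * flow01[inside])
    np.add.at(den, (ty[inside], tx[inside]), weight[inside])
    hit = den > 0
    flow_t0 = np.zeros_like(num)
    flow_t0[hit] = -t * num[hit] / den[hit][:, None]
    return flow_t0, hit


flow01 = np.zeros((1, 3, 2))
flow01[0, 0, 0] = 2.0                 # near pixel at column 0 moves 2 px right
depth0 = np.array([[1.0, 4.0, 1.0]])  # column 1 is a far, static pixel
flow_t0, hit = depth_aware_flow_projection(flow01, depth0, t=0.5)
print(hit)           # column 0 is a hole: nothing lands there
print(flow_t0[0, 1]) # dx of about -0.8, pulled toward the near pixel's -1.0
```

In the example, the near pixel and the far pixel both land on column 1 at `t = 0.5`. An unweighted average would give a projected flow of -0.5; the depth weighting gives -0.8, favoring the closer object, which is the behavior the quoted sentence describes.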

The proposed DAIN model learns hierarchical features to gather contextual information from neighboring pixels in each frame. To synthesize the output frame, the model warps the input frames, depth maps, and contextual features based on the optical flow and local interpolation kernels, as elaborated in the architecture below:

As the architecture shows, the model comprises five submodules: the flow estimation, depth estimation, context extraction, kernel estimation, and frame synthesis networks.
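Warping is the step that moves pixels along the estimated flow. The NumPy sketch below shows plain backward warping with bilinear sampling: every output pixel looks up the input frame at its own position plus its flow vector. This is a simplification chosen for illustration; DAIN's own warping layer also applies the learned local interpolation kernels, which the fixed bilinear weights here merely stand in for, and the function name `backward_warp` is hypothetical.

```python
import numpy as np


def backward_warp(image, flow):
    """Sample `image` at (x + dx, y + dy) for every output pixel, bilinearly.

    image: (H, W) grayscale frame.
    flow:  (H, W, 2) displacements (dx, dy) pointing from the output frame
           into `image`. Sample positions outside the frame are clamped
           to the border.
    """
    h, w = image.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    # Sub-pixel sample positions, clamped to the image bounds.
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)
    # Integer corners of the 2x2 neighborhood around each sample position.
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    ax = sx - x0  # horizontal bilinear weight
    ay = sy - y0  # vertical bilinear weight
    img = image.astype(np.float64)
    top = (1 - ax) * img[y0, x0] + ax * img[y0, x1]
    bottom = (1 - ax) * img[y1, x0] + ax * img[y1, x1]
    return (1 - ay) * top + ay * bottom


frame = np.array([[0.0, 10.0, 20.0, 30.0]])
flow = np.zeros((1, 4, 2))
flow[..., 0] = 0.5  # every output pixel samples half a pixel to the right
print(backward_warp(frame, flow))  # the last sample is clamped to the border
```

Backward warping (pulling pixels from the source) is the usual choice because every output pixel gets exactly one value, unlike forward splatting, which can leave holes or collisions.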

Because it synthesizes intermediate frames, the model can increase a video's temporal resolution, for example by raising its frame rate to 60 frames per second, which makes motion look noticeably smoother.
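Converting to a target frame rate is mostly bookkeeping: each output frame falls between two source frames at some fraction `t` of the gap. Since DAIN's flow projection is formulated for an arbitrary intermediate time `t`, the Python sketch below (a hypothetical helper, not part of DAIN's code) computes, for every output frame, which source pair to interpolate and at what `t`. Exact fractions avoid floating-point drift, so output frames that coincide with source frames come out with `t = 0` (or `t = 1` for the final frame) and can simply be copied.

```python
from fractions import Fraction


def conversion_schedule(src_fps, dst_fps, n_src_frames):
    """For each output frame, return (i, t): interpolate source frames i and
    i + 1 at fractional time t in [0, 1]. Hypothetical helper for illustration."""
    if n_src_frames < 2:
        raise ValueError("need at least two source frames")
    src = Fraction(src_fps)
    dst = Fraction(dst_fps)
    last = Fraction(n_src_frames - 1)  # position of the final source frame
    schedule = []
    k = 0
    while True:
        pos = Fraction(k) * src / dst  # output frame k, in source-frame units
        if pos > last:
            break
        i = int(pos)  # earlier source frame (floor, since pos >= 0)
        t = pos - i   # fractional time between frames i and i + 1
        if i == n_src_frames - 1:
            # Exactly on the final frame: express it as pair (i - 1, i) at t = 1.
            i, t = i - 1, Fraction(1)
        schedule.append((i, t))
        k += 1
    return schedule


for i, t in conversion_schedule(24, 60, 3):
    print(i, t)
```

For example, converting three frames of 24fps footage to 60fps yields six output frames, and only the first and last coincide with original frames; the other four must be synthesized.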

Coupled with colorization techniques, DAIN can help restore old video clips: interpolation smooths out their choppy, low-frame-rate motion while colorization revives the footage, providing a more immersive visual experience, as compared below:

Like other machine learning solutions such as image upscaling and voice cloning, AI-driven video frame interpolation is finding adopters across verticals that want to improve user experience.

Let’s explore how businesses can provide optimum engagement and immersive visual experience to their customers using DAIN.

Potential Use Cases of AI-driven Video Frame Interpolation

AI-driven video frame interpolation has gained significant traction in the computer vision community to fuel highly innovative applications, such as-

1) Slow Motion Video Generation

Slow motion (SloMo) video generation is an emerging computer vision application that interpolates video frames to create smooth, high-frame-rate video streams. DAIN generates the missing intermediate frames from low-frame-rate footage, so the result can be played back slowly without stuttering.

A deep learning practitioner, Noomkrad, demonstrates DAIN's frame interpolation (temporal super-resolution) capability on footage from the survival horror game Resident Evil 2:

The channel compares the original and the interpolated video in 4X slow motion. The slowed-down original footage looks jerky, whereas the DAIN output stays smooth because the tool quadruples the frame rate, synthesizing three new frames between every pair of original frames.
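The 4X figure translates directly into frame counts: with three synthesized frames per gap, N original frames become (N - 1) * 4 + 1. The Python sketch below shows that bookkeeping; `interpolate` is a hypothetical stand-in for a trained model such as DAIN, and the usage example plugs in a plain linear blend on numbers purely so the output is easy to check.

```python
def slow_motion(frames, factor, interpolate):
    """Insert (factor - 1) synthesized frames between each consecutive pair.

    `interpolate(f0, f1, t)` is any interpolator (a DAIN-style model in
    practice). The output has (len(frames) - 1) * factor + 1 frames. Played
    at the source frame rate it gives `factor`-times slow motion; played at
    `factor` times the source rate it keeps real-time speed, only smoother.
    """
    if factor < 1:
        raise ValueError("factor must be at least 1")
    if not frames:
        return []
    out = []
    for f0, f1 in zip(frames[:-1], frames[1:]):
        out.append(f0)
        # Synthesize frames at t = 1/factor, 2/factor, ..., (factor - 1)/factor.
        for j in range(1, factor):
            out.append(interpolate(f0, f1, j / factor))
    out.append(frames[-1])
    return out


def lerp(a, b, t):
    """Stand-in interpolator for the demo: a plain linear blend of two numbers."""
    return (1 - t) * a + t * b


print(slow_motion([0.0, 4.0, 8.0], 4, lerp))
# -> [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
```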

The applications of AI-driven slow-motion video are far-reaching, including sports, research, cinema, video games, and more.

2) Automated Animation

Animated designs, including 2D and 3D animations, are widely used across industries, from movies and cartoons to branding and advertising. Improving animation quality boosts engagement and impact, which eventually increases a brand's viewership and profitability.

Here's a stark comparison between the smoothness achieved by the DAIN-enabled 60fps video and the original anime.

In addition, animation with AI-driven video frame interpolation has vast scope for automating high-quality marketing campaigns for businesses across industries. 3D product demonstrations and video content are increasingly common on eCommerce portals as a way to boost customer satisfaction and loyalty.

Deploy AI-driven Video Frame Interpolation with Oodles AI

In the digital age, visual content has the greatest impact on customers across borders. To help businesses optimize their customer outreach, Oodles AI offers DAIN-enabled high-frame-rate video generation services. Our expertise in neural networks has enabled us to build and deploy various predictive AI solutions, such as:

a) Image Caption Generator, and

b) Facial Recognition for employee attendance

In addition, our AI services extend to recommendation engines, predictive analytics, computer vision, and AI-powered chatbot development.

Connect with our team of AI developers and analysts to learn more about our AI capabilities and solutions.