AI-backed Technologies for Visually Impaired Users

Anmol Kalra | 30th August 2021

We humans have made enormous progress in programming and technology.

Thinking of ourselves as a “global brain,” we have built on our natural collective intelligence and keep looking for ways to improve it. Behind this vision lies the assumption that Artificial Intelligence (AI) can support us by performing tasks better and by sharing the cognitive workload of that global brain.

The role that AI plays in human life is growing rapidly. We need to understand the basic structure of AI and harness what our minds can do when they meet machines. The impact of emerging technologies like AI is unlikely to fade in the coming years.

One of the most important questions we seek to answer is how to put the AI we have developed to work for our prosperity.

AI has reshaped various fields and industries, including finance, education, healthcare, transportation, and more. Just as every sector needs an upgrade, your business needs an expert who can help you look at AI through the lens of business capabilities.

At Oodles AI, we use deep neural network libraries and machine learning algorithms to provide OCR, predictive analysis, and chatbot development, along with other services that we think can make life easier for visually impaired users.

Like every other sector, the healthcare industry employs various AI tools and software to increase efficiency and output. Tools like Optical Character Recognition (OCR) and deep neural networks are already helping the medical sector boost its potential. It may be only a matter of years before white-coated doctors are replaced by bots and humanoids that give prescriptions and carry out treatments hand in hand with trained surgeons.

From finding clinical solutions to problems to storing electronic health records, AI has come a long way. But treating each patient with the best available technology, and continually updating that knowledge to benefit subsequent patients, remains a grand challenge for AI.

Table of Contents

How do AI-backed tools and Software help the visually impaired?

Challenges faced by the visually impaired

Technologies for people with vision loss disability

AI-backed Assistive Technology

Screen readers

Text-to-speech (TTS) Synthesizer

Braille displays

Fortunately, people with low vision, partial sight, or total blindness may benefit from AI and machine learning tools that make their daily lives easier.

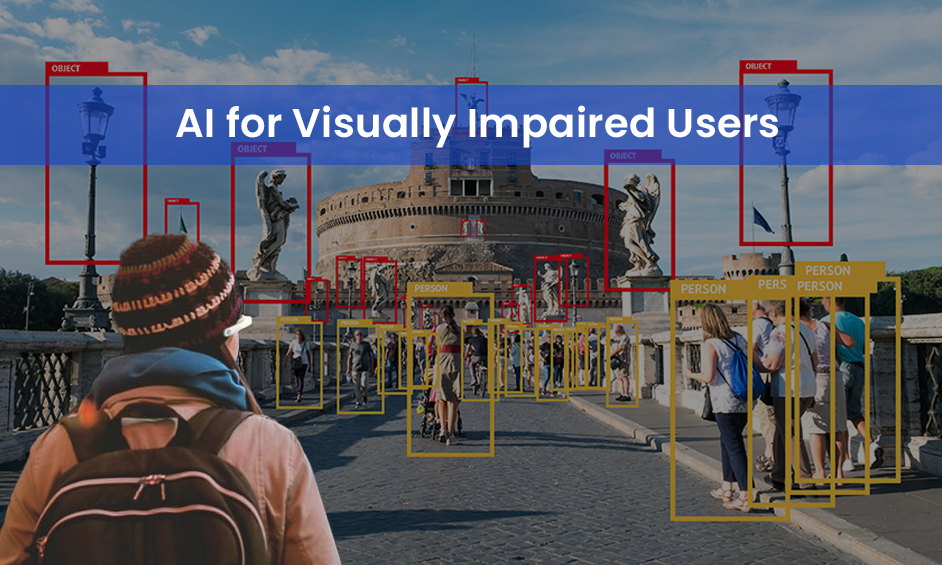

How do AI-backed tools and Software help the visually impaired?

Challenges faced by the visually impaired

Visually impaired people lead everyday lives with their own ways of doing things. But they face various difficulties due to inaccessible infrastructure and social challenges.

Much of the time, they end up depending on another person. But with the help of the right AI tools, they can live more independently.

(a) Identifying the correct text in the document

(b) Identifying the right path to travel, and

(c) Assistance in climbing stairs and crossing roads

These are some of the challenges a visually impaired person faces daily, and AI-backed technologies are likely to help solve them.

By combining various technologies, AI can turn out to be a life-saving tool for visually impaired users.

1. IBM Watson is AI technology developed by IBM, whose accessibility research provides insight into how AI is beginning to transform the lives of the visually impaired. On one occasion, Watson reportedly cross-referenced 20 million oncology records and correctly diagnosed a rare leukemia condition in a patient.

Oodles AI specializes in applying IBM Watson-powered machine learning technology to respond to natural language and automate conversations across various chatbot platforms. These platforms are well suited to visually impaired people in their daily interactions.

2. Google’s AI eye doctor is a Google initiative. The company is working with Indian doctors to develop an AI system that can examine retinal images and detect diabetic retinopathy, a condition that can cause blindness. Google has been working on diagnosing retinal disease for several years now.

3. Think of a wearable device that can capture what you are looking at and then display it to you up close and with high precision. eSight does just that. It captures a high-quality view of the scene and displays it on two high-resolution OLED screens directly in front of your eyes. Custom optics and established algorithms based on neural networks enhance the picture to maximize functional sight. The result?

Synaptic activity from the remaining photoreceptor function in the user’s eyes is stimulated to provide the brain with increased visual information, naturally compensating for gaps in the user’s field of view.

4. Several other revolutionary tools, such as Seeing AI, harness AI and machine learning to provide deep-neural-network solutions to various vision-related problems. Seeing AI also uses a barcode scanner to identify a product and announce it to the user as speech.

Optical Character Recognition (OCR) also lets users take pictures of text, runs various pre- and post-processing steps, and reads the text back through the device’s screen reader. For example, a user can photograph a document or printed recipe and listen to it on their smartphone.

Technologies for people with vision loss disability

When we think of technology from the perspective of people with vision loss, we can summarise it into two broad categories:

♦ General technology: It includes Computers, smartphones, GPS devices, etc.

♦ Assistive technology: It includes products specifically designed to help people with vision loss, such as screen readers for blind users, screen magnifiers for low-vision users, video magnifiers and other devices for reading and writing with low vision, braille watches, and braille displays.

Assistive technology uses AI and deep learning to analyse the user’s behaviour and then adapts to it for the user’s benefit.

AI-backed Assistive Technology

♦ Screen readers: These are AI-backed software programs that let visually impaired users read the text displayed on a computer screen through a braille display or a speech synthesizer. A screen reader is the interface between the computer’s OS, its applications, and the user. The user sends commands by pressing different keys on the keyboard or braille display to tell the speech synthesizer what to speak. A command can instruct the synthesizer to read or spell a word, read a full screen of text, find a string of text on the screen, or announce the location of the item that is in focus.

In addition, it lets users perform functions such as locating text displayed in a specific color and order, reading highlighted text, and reading pre-designated parts of the screen on demand. Users can also use the spell checker in a word processor or a spreadsheet with a screen reader.
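The command set described above can be sketched as a small key-to-action table. This is only an illustration: the `ScreenBuffer` class, the key letters, and the returned strings are hypothetical and do not reflect how any particular screen reader is built. The strings returned here stand for the text that would be handed to the speech synthesizer or braille display.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ScreenBuffer:
    """Hypothetical stand-in for the text currently shown on screen."""
    lines: List[str]
    focus_row: int = 0   # line that holds the focus
    focus_word: int = 0  # index of the focused word on that line

    def _focused(self) -> str:
        return self.lines[self.focus_row].split()[self.focus_word]

    def read_word(self) -> str:
        return self._focused()

    def spell_word(self) -> str:
        # Spelling a word means announcing it letter by letter.
        return " ".join(self._focused())

    def read_screen(self) -> str:
        return " ".join(self.lines)

    def announce_focus(self) -> str:
        return f"line {self.focus_row + 1}, word {self.focus_word + 1}"

    def find(self, needle: str) -> Optional[Tuple[int, int]]:
        """Return (line index, character index) of the first match, if any."""
        for row, line in enumerate(self.lines):
            col = line.find(needle)
            if col != -1:
                return row, col
        return None


def handle_key(screen: ScreenBuffer, key: str) -> str:
    """Map a key press to the text that would be sent to the synthesizer."""
    commands = {
        "w": screen.read_word,
        "s": screen.spell_word,
        "a": screen.read_screen,
        "f": screen.announce_focus,
    }
    action = commands.get(key)
    return action() if action else "unknown command"


screen = ScreenBuffer(["Save file", "Cancel"], focus_row=0, focus_word=1)
print(handle_key(screen, "w"))  # file
print(handle_key(screen, "s"))  # f i l e
print(handle_key(screen, "f"))  # line 1, word 2
```

Keeping the commands in a dictionary makes it easy to remap keys, which matters because braille displays and keyboards expose different key sets.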

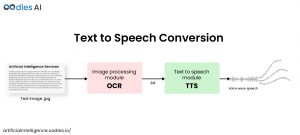

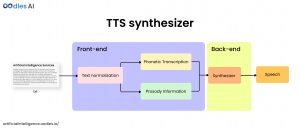

♦ Text-to-speech (TTS) Synthesizer: Text-to-speech (TTS) is the process of converting text into sound, that is, the artificial production of human speech. An AI-backed system used for this purpose is called a speech synthesizer and can be implemented in software or hardware. A TTS system consists of a front-end and a back-end functional unit.

The text-to-speech (TTS) device consists of two main modules: the image processing module and the voice processing module.

➡ The image processing module captures an image using the camera and converts it into text (OCR converts .jpg to .txt form).

➡ The voice processing module changes the text into sound and processes it with specific physical characteristics so that the sound can be understood (converts .txt to speech).
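The two-module design can be sketched as a function that chains an OCR step and a speech step. This is a minimal sketch under stated assumptions: `make_reader`, `ocr`, and `speak` are hypothetical names, and the lambda below only stands in for a real OCR engine. In practice each callable would wrap whichever OCR and speech libraries the device uses.

```python
from typing import Callable, List


def make_reader(
    ocr: Callable[[bytes], str],
    speak: Callable[[str], None],
) -> Callable[[bytes], str]:
    """Combine an image-processing module and a voice-processing module."""

    def read_aloud(image: bytes) -> str:
        raw_text = ocr(image)               # image -> text
        text = " ".join(raw_text.split())   # tidy line breaks and extra spaces
        if text:
            speak(text)                     # text -> speech
        return text

    return read_aloud


# Stand-ins for the real modules (assumptions, for illustration only).
spoken: List[str] = []
reader = make_reader(
    ocr=lambda image: "Add two\n cups of   flour",
    speak=spoken.append,
)

result = reader(b"fake image bytes")
print(result)  # Add two cups of flour
```

Passing the two modules in as callables keeps the pipeline independent of any specific OCR or speech engine, so either side can be swapped without touching the other.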

The TTS synthesizer relies on synthesis techniques such as concatenative speech synthesis and unit selection-based speech synthesis.

The essential qualities of a speech synthesis system are naturalness and intelligibility.

Naturalness describes how closely the output sounds like human speech, while intelligibility is the ease with which the output is understood. The two primary AI-backed technologies used for generating synthetic speech waveforms are concatenative synthesis and formant synthesis.

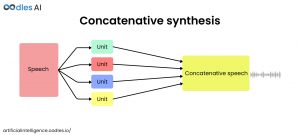

➡ Concatenative synthesis: It is based on stringing together tiny segments of recorded speech to generate a single output. Because it reuses real recordings, concatenative synthesis generally produces very natural-sounding synthesized speech.

➡ Formant synthesis: Commonly known as rules-based synthesis, it does not use human speech samples at runtime. Instead, acoustic model parameters such as fundamental frequency, voicing, and noise levels are varied over time to create the output waveform of artificial speech.
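To make the formant idea concrete, the sketch below builds a vowel-like sound from parameters alone, with no recorded speech. It is a deliberately simplified additive approximation, not a production formant synthesizer: harmonics of a gliding fundamental frequency are weighted by an envelope that peaks at the formant frequencies. The function names, the bandwidth, and the formant values (roughly an "ah" vowel) are illustrative assumptions.

```python
import math


def formant_gain(freq, formants, bandwidth=80.0):
    """Spectral envelope: gain peaks near each formant frequency (Hz)."""
    return sum(1.0 / (1.0 + ((freq - f) / bandwidth) ** 2) for f in formants)


def synthesize_vowel(f0_start, f0_end, formants,
                     duration=0.25, rate=16000, harmonics=20):
    """Return samples in [-1, 1] for a vowel whose pitch glides over time."""
    n = round(duration * rate)
    samples = []
    phase = 0.0
    for i in range(n):
        # The fundamental frequency is a parameter varied over time.
        f0 = f0_start + (f0_end - f0_start) * i / max(n - 1, 1)
        phase += 2 * math.pi * f0 / rate
        value = sum(
            formant_gain(k * f0, formants) * math.sin(k * phase)
            for k in range(1, harmonics + 1)
            if k * f0 < rate / 2  # skip harmonics above the Nyquist limit
        )
        samples.append(value)
    peak = max(abs(s) for s in samples) or 1.0
    return [s / peak for s in samples]


# Assumed formant values for illustration; pitch falls from 120 Hz to 100 Hz.
wave = synthesize_vowel(120.0, 100.0, formants=(730.0, 1090.0, 2440.0))
print(len(wave))  # 4000
```

The resulting samples could be written to a file with Python's standard `wave` module. Changing only the formant tuple changes the perceived vowel, which is the sense in which this approach is rule-driven.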

The process involved in TTS synthesizers:

♦ Text Processing

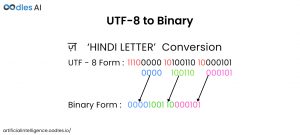

Text input to the synthesizer can be in a transliterated form or in UTF-8 (Unicode Transformation Format, 8-bit). If the incoming text is in UTF-8, it is converted to the transliterated/binary form before further processing.

Here we can see the UTF-to-binary conversion of a Hindi letter.
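The same kind of conversion can be reproduced in a few lines. The letter chosen here (अ, code point U+0905) is an assumption for illustration and may differ from the one shown in the figure.

```python
def utf8_bits(char: str) -> str:
    """Return the UTF-8 encoding of a character as space-separated bytes in binary."""
    return " ".join(f"{byte:08b}" for byte in char.encode("utf-8"))


letter = "अ"  # Devanagari letter A, chosen for illustration

print(f"U+{ord(letter):04X}")           # U+0905
print(letter.encode("utf-8").hex(" "))  # e0 a4 85
print(utf8_bits(letter))                # 11100000 10100100 10000101
```

Devanagari characters take three bytes in UTF-8: the first byte starts with `1110` and the two continuation bytes start with `10`.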

The text processing module consists of pre-processing and syllabication modules. The text in transliterated form is first pre-processed to remove invalid characters. The pre-processed text is then passed on to the syllabication module.
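A minimal sketch of these two stages is shown below. It assumes lowercase Latin transliteration and a naive rule (any consonants followed by a run of vowels form one syllable). Real syllabication rules depend on the language and the transliteration scheme, so both the rule and the function names are hypothetical.

```python
import re
from typing import List

VOWELS = "aeiou"


def preprocess(text: str) -> str:
    """Drop characters outside a-z and whitespace, then normalise spacing."""
    cleaned = re.sub(r"[^a-z\s]", "", text.lower())
    return " ".join(cleaned.split())


def syllabify(word: str) -> List[str]:
    """Split a word into units of the form consonants + vowels (naive rule)."""
    parts = re.findall(rf"[^{VOWELS}]*[{VOWELS}]+", word)
    if not parts:
        return [word] if word else []
    # Any trailing consonants are attached to the last syllable.
    parts[-1] += word[len("".join(parts)):]
    return parts


clean = preprocess("Namaste, duniya! 123")
print(clean)                                     # namaste duniya
print([syllabify(w) for w in clean.split()])     # [['na', 'ma', 'ste'], ['du', 'ni', 'ya']]
```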

(a) The first step is building a speech database. We select the language and assemble a large-scale speech corpus of sentences that are widely used in verbal communication. In this corpus, we try to cover as many everyday conversations as possible.

(b) Speech recording is the second important task. Voice data from different speakers with clear pronunciation is recorded using discrete parameters. PRAAT is commonly used for this task.

(c) The most crucial step is speech data segmentation and labelling. The quality of the synthesized speech depends directly on the accuracy of the labelling process. Neural networks are used to segment the recorded speech and remove noise from it.


(d) The next step includes unit selection and speech generation.

The data received from the language processing module are used as parameters. These parameters describe the fundamental frequency and duration of each phoneme. When the module receives input text, it first counts the spaces in it to find the word boundaries. The text is then split into words, labels, and phonemes. The module searches the database to fetch the corresponding wave file for each unit, strings together all the small unit-sized wave files, and is then ready to generate the speech.
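The lookup-and-join step can be sketched as follows. Everything here is a toy stand-in: the `LEXICON`, the `UNIT_DB` contents, and the short sample lists are hypothetical, and candidates are chosen only by how close their recorded fundamental frequency is to a target value. A real unit selection system would also weigh duration and how smoothly neighbouring units join.

```python
from typing import Dict, List

# Hypothetical lexicon: word -> unit labels produced by the text processing stage.
LEXICON: Dict[str, List[str]] = {
    "namaste": ["na", "ma", "ste"],
    "ma": ["ma"],
}

# Hypothetical unit database: label -> candidate recordings (tiny sample lists).
UNIT_DB: Dict[str, List[dict]] = {
    "na": [{"f0": 110.0, "samples": [0.1, 0.2]},
           {"f0": 180.0, "samples": [0.15, 0.25]}],
    "ma": [{"f0": 115.0, "samples": [0.3, 0.4]},
           {"f0": 190.0, "samples": [0.35, 0.45]}],
    "ste": [{"f0": 120.0, "samples": [0.5, 0.6, 0.7]}],
}

SILENCE = [0.0]  # short pause inserted at each space


def pick_unit(label: str, target_f0: float) -> List[float]:
    """Choose the candidate whose fundamental frequency is closest to the target."""
    best = min(UNIT_DB[label], key=lambda unit: abs(unit["f0"] - target_f0))
    return best["samples"]


def synthesize(text: str, target_f0: float) -> List[float]:
    """Split text at spaces, look up each unit, and string the samples together."""
    output: List[float] = []
    for index, word in enumerate(text.split()):
        if index:
            output.extend(SILENCE)
        for label in LEXICON[word]:
            output.extend(pick_unit(label, target_f0))
    return output


print(synthesize("ma namaste", target_f0=120.0))
# [0.3, 0.4, 0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7]
```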

♦ Braille displays: A braille display provides access to the text on a computer screen by raising and lowering different pins in braille cells. It is refreshable and typically shows up to about 80 characters from the screen at a time. The output changes as the user moves the cursor around the screen using the cursor routing keys or the command keys. The display is usually driven by screen-reader software, which uses a fixed translation algorithm to convert the displayed text into electronic output in the form of braille pins.
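The text-to-pins translation can be illustrated with the standard six-dot patterns for the English alphabet. This sketch covers uncontracted lowercase letters only and renders each cell as a Unicode braille character in place of physical pins. The `render` function and its `width` parameter are hypothetical, with the width standing in for the number of cells on the device.

```python
# Raised dots (numbered 1-6) for the letters a-j.
A_TO_J = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4), "j": (2, 4, 5),
}


def build_table():
    """Derive the full alphabet: k-t add dot 3, u/v/x/y/z add dots 3 and 6."""
    table = dict(A_TO_J)
    for base, letter in zip("abcdefghij", "klmnopqrst"):
        table[letter] = tuple(sorted(A_TO_J[base] + (3,)))
    for base, letter in zip("abcde", "uvxyz"):
        table[letter] = tuple(sorted(A_TO_J[base] + (3, 6)))
    table["w"] = (2, 4, 5, 6)
    table[" "] = ()  # blank cell: no pins raised
    return table


BRAILLE = build_table()


def to_cell(char: str) -> str:
    """Return the Unicode braille pattern for one character."""
    dots = BRAILLE.get(char.lower(), ())  # unsupported characters show as blank
    # In the Unicode braille block, dot n sets bit n-1 above U+2800.
    return chr(0x2800 + sum(1 << (dot - 1) for dot in dots))


def render(text: str, width: int = 40) -> str:
    """Show as much of the text as fits on a display with `width` cells."""
    return "".join(to_cell(char) for char in text[:width])


print(BRAILLE["s"])   # (2, 3, 4)
print(render("cab"))  # ⠉⠁⠃
```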

Build resilient OCR and TTS process modules with Oodles AI

Various AI-backed technologies are being designed to help those with visual impairments, and that is nothing short of an achievement. But the key is balance: the balance between the use of AI-backed tools and the user’s ability to work with them.

Oodles AI brings over ten years of experience to the table to build resilient solutions for various AI domains using next-generation technologies and applications. We have an expert team dedicated to providing AI-driven OCR and text-recognition solutions that deliver actionable insights for better decision-making.

We are accomplished experts providing AI-backed services across various domains. Our hands-on knowledge of machine learning and deep neural networks empowers us to provide effective AI solutions, including:

- Predictive analysis services

- Chatbot development for better customer interaction

- Optical Character Recognition for healthcare

Join hands with the Oodles AI team to explore how AI-backed technologies can provide resilient solutions for visually impaired users and help them experience a better life.